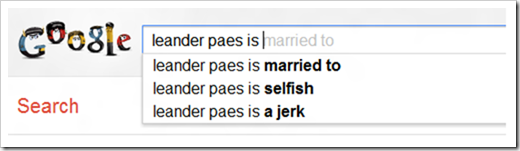

If you or someone with the same name as yours has had a brush with controversy and generated lots of online attention, chances are that some of Google’s search suggestions will give you sleepless nights.

The Leander Paes example possibly shows some of the mildest of the rude suggestions — there are far more disturbing ones that appear for other instantly recognizable personalities.

Keeping a Check on Autocomplete

There have been several instances of people taking Google to court to remove uncomplimentary suggestions about themselves.

One of the newest cases coming to light is that of Germany’s former first lady, Bettina Wulff, who has now taken on Google in a defamation case. Google’s suggestion algorithm looks up related queries previously made by users to help provide a better experience, and it ended up associating the words ‘escort’ and ‘prostitute’ with Ms Wulff.

Earlier this year, a man in Japan successfully obtained a court order for Google to remove mentions of criminal activity which he claimed were linked with his name through the autocomplete feature. According to him, the criminal acts were committed by someone with the same name, and he believed the association made it hard for him to find employment.

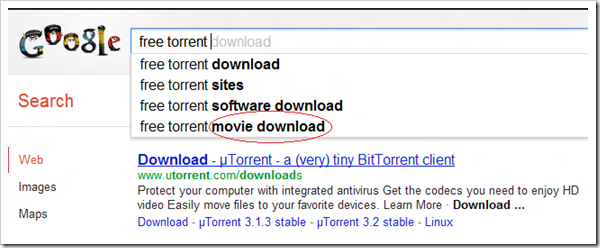

There has been intense pressure from anti-piracy campaigners to stop Google from aiding downloads of pirated music and movies through the autocomplete suggestions. Google agreed to join the fight to protect copyrighted material, promising changes to its autocomplete algorithm along with three other major tweaks aimed at the piracy headache. However, the search engine giant didn’t consider completely removing the piracy-related results from its index.

These autocomplete changes were announced in late 2010, but it is not clear how far they were meant to go.

This is what I got after typing “free torrent”:

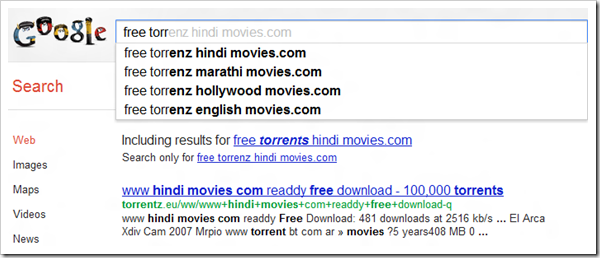

When I typed “free torr”, Google included misspellings of the word “torrent” in its suggestions and also helped me out with a friendly message: “Including results for free torrents hindimovies.com”.

Torrent technology is used to legitimately distribute certain software, but has almost become synonymous with piracy because of its widespread use in illegally distributing music and movies.

A Tricky Frontline

Much like social media, Google’s autocomplete suggestions revolve around trends among users.

In cases like that of Ms Wulff, rumors are seen to dominate the scene. And, it is not uncommon to see nasty hints appearing while looking for information on people and places, a clear reflection of what is going on in the minds of many people.

The fact of the matter is that racists, hate campaigners and rumormongers existed long before Google came into being. Contemporary technology has brought all their evil thoughts right to our fingertips, since features like autocomplete only showcase the views of end users.

This problem has opened an interesting frontline in the censorship debate. It would be understandable for Google to block out extreme cases of profanity and racism which stand out easily and threaten to pollute the search experience.

But what about tricky cases of misspellings and transliterations from other languages?
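To see why such cases are tricky, here is a minimal sketch in Python. It is not Google's actual algorithm, only a toy model that assumes suggestions are ranked purely by how often past users typed them, with an exact-match word blocklist on top. The function name `suggest`, the `blocklist` parameter and the sample query log are all made up for illustration.

```python
from collections import Counter


def suggest(query_log, prefix, blocklist=frozenset(), limit=3):
    """Return the most frequent logged queries that start with `prefix`.

    Toy model only: ranks by raw frequency in a hypothetical query log
    and drops any query containing an exactly blocklisted word.
    """
    counts = Counter(q.strip().lower() for q in query_log)
    prefix = prefix.strip().lower()
    candidates = [
        (query, n)
        for query, n in counts.items()
        if query.startswith(prefix)
        and not any(word in blocklist for word in query.split())
    ]
    # Most frequent first; alphabetical order breaks ties.
    candidates.sort(key=lambda item: (-item[1], item[0]))
    return [query for query, _ in candidates[:limit]]


# Hypothetical log of past searches, including a common misspelling.
log = [
    "free torrent", "free torrent", "free torrent",
    "free torrnt", "free torrnt",
    "free tutorials",
]

unfiltered = suggest(log, "free t")
filtered = suggest(log, "free t", blocklist={"torrent"})
print(unfiltered)
print(filtered)
```

In this toy model, blocking the word “torrent” removes the correctly spelled suggestion, but the misspelled “free torrnt” still gets through because it does not match the blocklist exactly. Transliterations from other languages would slip past in the same way.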

Google doesn’t have a systematic complaint registration system for cleaning up the junk appearing in its autocomplete mechanism, and such controls would be impossible to manage, given that over 35,000 searches are made every second.

When there is hatred and rumormongering manifest in everyday social media and internet tools, the real root of the problem lies in society itself.

Instead of calling for tight control of what information appears before us, the very tools that are hit by pollution can be used to educate people not just to use the internet responsibly, but to help refine socially acceptable behavior as well.

What do you think?
